Great Leveler has integrated cutting-edge technology into its AI assistants by adopting the "Tree of Thoughts" (ToT) concept in Asilisc Handy®. Developed by researchers at Princeton University, this approach is designed to significantly improve the problem-solving abilities of large language models (LLMs) and is a core component of Great Leveler's AI assistants.

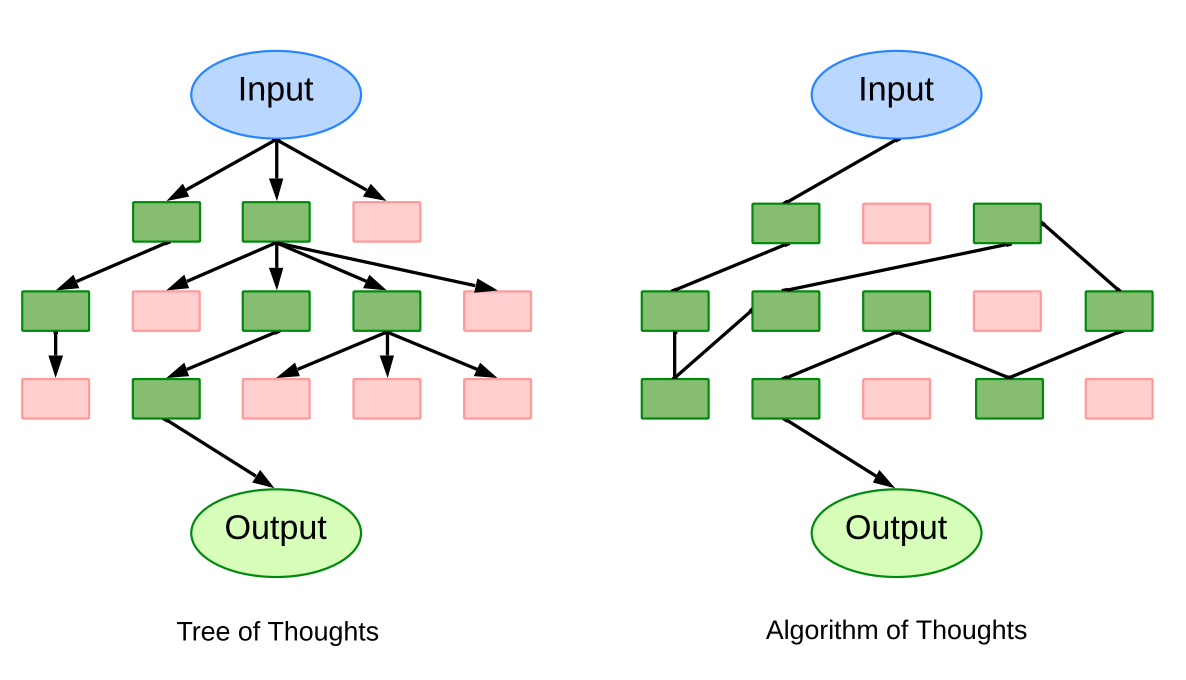

ToT lets an LLM evaluate, through a structured reasoning process, how much progress its intermediate thoughts are making toward a solution. It combines the LLM's ability to generate and evaluate thoughts with search algorithms such as breadth-first and depth-first search, enabling a systematic exploration of thoughts with both lookahead and backtracking. ToT is particularly useful for tasks that require strategic planning or where early decisions strongly affect the outcome.
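The generate-evaluate-search loop described above can be sketched as a breadth-first search that keeps only the most promising partial solutions (a beam) at each depth. This is a minimal sketch under stated assumptions: `propose_thoughts` and `evaluate_state` are hypothetical stand-ins for LLM calls, replaced here by deterministic rules on a toy puzzle (reach a target number from a start value using the steps "+3" and "*2"), and `beam_width` and `max_depth` are illustrative parameters, not settings from Great Leveler's system.

```python
from typing import List, Optional, Tuple

# A state is one node in the tree: (current value, steps taken so far).
State = Tuple[int, List[str]]


def propose_thoughts(state: State) -> List[State]:
    """Hypothetical stand-in for the LLM thought generator: expand one node."""
    value, steps = state
    return [(value + 3, steps + ["+3"]), (value * 2, steps + ["*2"])]


def evaluate_state(state: State, target: int) -> float:
    """Hypothetical stand-in for LLM self-evaluation: closer to target scores higher."""
    value, _ = state
    return -abs(target - value)


def tot_bfs(start: int, target: int, max_depth: int, beam_width: int) -> Optional[List[str]]:
    """Breadth-first ToT search that keeps the best `beam_width` states per level."""
    frontier: List[State] = [(start, [])]
    for _ in range(max_depth):
        # Generate: expand every state in the current frontier.
        candidates = [child for state in frontier for child in propose_thoughts(state)]
        for value, steps in candidates:
            if value == target:
                return steps
        # Evaluate and prune: keep only the most promising partial solutions.
        candidates.sort(key=lambda s: evaluate_state(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return None
```

For example, `tot_bfs(1, 11, max_depth=5, beam_width=2)` finds the chain `["+3", "*2", "+3"]` (1 → 4 → 8 → 11). In a real ToT setup each call to the two helper functions would be an LLM prompt, so the beam width trades answer quality against the number of model calls.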

Inspired by the trial-and-error way humans work through complex reasoning tasks, ToT lets the model revisit earlier steps when needed. It breaks problems such as mathematical puzzles into intermediate reasoning steps and explores them through a tree search that can span multiple rounds of interaction with the LLM. Guiding the search with the LLM's own self-evaluation and deliberation is the novel part: earlier search methods relied on heuristics that were either hand-programmed or learned from experience.
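The backtracking behaviour can be illustrated with a depth-first variant: explore the most promising thought first, prune states the evaluator judges hopeless, and return to the parent when a branch fails. The sketch below assumes a toy task (pick up to `max_depth` numbers from 7, 5 and 3 that sum exactly to `target`); `propose_thoughts` and `is_promising` are hypothetical placeholders for the LLM's generation and self-assessment, and `trace` simply records prune and backtrack events.

```python
from typing import List, Optional


def propose_thoughts(partial: List[int]) -> List[int]:
    """Hypothetical LLM generator stand-in: candidate next numbers, best guess first."""
    return [7, 5, 3]


def is_promising(partial: List[int], target: int) -> bool:
    """Hypothetical LLM evaluator stand-in: a partial sum above target can never recover."""
    return sum(partial) <= target


def tot_dfs(partial: List[int], target: int, max_depth: int, trace: List[str]) -> Optional[List[int]]:
    """Depth-first ToT search with pruning and backtracking."""
    if sum(partial) == target:
        return partial
    if len(partial) == max_depth:
        return None  # depth limit reached without a solution
    for step in propose_thoughts(partial):
        child = partial + [step]
        if not is_promising(child, target):
            trace.append(f"prune {child}")
            continue
        result = tot_dfs(child, target, max_depth, trace)
        if result is not None:
            return result
        trace.append(f"backtrack from {child}")  # branch failed: return to parent
    return None
```

With `target=13` and `max_depth=3`, the search first commits to `[7, 5]`, finds that every extension overshoots, backtracks, and then settles on `[7, 3, 3]`.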

Experiments show that ToT significantly improves the problem-solving capabilities of language models. To integrate ToT into a software system, the LLM is augmented with several additional components: a prompting agent, a verification module, a memory unit, and a ToT overseer.

The problem-solving process begins when the user presents a problem. The prompting agent passes the problem to the LLM along with a prompt that encourages it to propose a partial solution rather than attempt a complete solution at once. The verification module then checks whether the proposed interim solution is valid. If it is, the solution is parsed and stored in the memory unit. The prompting agent then uses the contents of the memory unit to generate a prompt that guides the LLM toward the next step.
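The loop in the preceding paragraph can be sketched as follows. Everything here is hypothetical and chosen for illustration: `mock_llm` stands in for the LLM by reading the partial solution from the prompt and proposing unused numbers in ascending order, `checker` plays the verification module, `Memory` is the memory unit, and `prompter` is the prompting agent. The toy task is to arrange the numbers 1 to `n` so that adjacent numbers differ by more than 1.

```python
import ast
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Memory:
    """Memory unit: keeps every validated partial solution in order."""
    history: List[List[int]] = field(default_factory=list)

    def store(self, partial: List[int]) -> None:
        self.history.append(list(partial))

    def latest(self) -> List[int]:
        return list(self.history[-1]) if self.history else []


def prompter(problem: str, memory: Memory) -> str:
    """Prompting agent: asks for one next step, not a complete solution."""
    return (
        f"{problem}\n"
        f"Current partial solution: {memory.latest()}\n"
        "Propose only the next number."
    )


def mock_llm(prompt: str, n: int) -> List[int]:
    """Hypothetical LLM stand-in: parses the partial solution and ranks unused numbers."""
    line = next(s for s in prompt.splitlines() if s.startswith("Current partial solution:"))
    partial = ast.literal_eval(line.split(":", 1)[1].strip())
    return [k for k in range(1, n + 1) if k not in partial]


def checker(partial: List[int], n: int) -> bool:
    """Verification module: distinct values in 1..n, neighbours differ by more than 1."""
    if len(set(partial)) != len(partial) or any(not 1 <= x <= n for x in partial):
        return False
    return all(abs(a - b) > 1 for a, b in zip(partial, partial[1:]))


def solve(n: int, max_rounds: int = 100) -> Optional[List[int]]:
    problem = f"Arrange the numbers 1..{n} so that adjacent numbers differ by more than 1."
    memory = Memory()
    for _ in range(max_rounds):
        partial = memory.latest()
        if len(partial) == n:
            return partial
        prompt = prompter(problem, memory)
        for candidate in mock_llm(prompt, n):
            proposal = partial + [candidate]
            if checker(proposal, n):
                memory.store(proposal)  # valid interim solution is kept
                break
        else:
            return None  # no valid next step; this forward-only loop cannot backtrack
    return None
```

`solve(5)` returns `[1, 3, 5, 2, 4]`, but `solve(4)` returns `None`: after `[1, 3]` no valid next number exists, and this forward-only loop has no way to undo an earlier choice. That gap is what the ToT overseer addresses.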

The ToT overseer continuously monitors the search, deciding whether to continue along the current path of thoughts or to backtrack to an earlier step and explore alternatives. Because it can abandon an interim solution that looks plausible but turns out to be a dead end, the system explores a wider range of solutions, which strengthens its long-range reasoning.
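One possible overseer policy, assumed here for illustration rather than taken from the source, is a simple rule: continue along the current path while the verification module accepts an untried next step, and backtrack one step when none remains. The sketch uses a toy arrangement task (order 1 to `n` so that neighbours differ by more than 1); `is_valid`, `propose` and `overseer_search` are hypothetical names, and `tried` records which children of each partial solution have already been explored so the search never repeats a branch.

```python
from typing import Dict, List, Optional, Set, Tuple


def is_valid(partial: List[int], n: int) -> bool:
    """Verification module stand-in: distinct values in 1..n, neighbours differ by > 1."""
    if len(set(partial)) != len(partial) or any(not 1 <= x <= n for x in partial):
        return False
    return all(abs(a - b) > 1 for a, b in zip(partial, partial[1:]))


def propose(partial: List[int], n: int) -> List[int]:
    """Hypothetical LLM stand-in: unused numbers in ascending order."""
    return [k for k in range(1, n + 1) if k not in partial]


def overseer_search(n: int) -> Tuple[Optional[List[int]], int]:
    """Rule-based ToT overseer: extend while possible, otherwise backtrack one step."""
    path: List[int] = []
    tried: Dict[Tuple[int, ...], Set[int]] = {}  # children already explored per node
    backtracks = 0
    while True:
        if len(path) == n:
            return path, backtracks
        seen = tried.setdefault(tuple(path), set())
        nxt = next(
            (c for c in propose(path, n) if c not in seen and is_valid(path + [c], n)),
            None,
        )
        if nxt is not None:
            seen.add(nxt)  # continue along the current thought path
            path = path + [nxt]
        elif path:
            path = path[:-1]  # dead end: backtrack to the previous step
            backtracks += 1
        else:
            return None, backtracks  # every branch from the root is exhausted
```

For `n = 4`, the first greedy choice `[1, 3]` is a dead end; the overseer backtracks four times before reaching `[2, 4, 1, 3]`. For `n = 3` no valid arrangement exists, and the search returns `None` once every branch from the root is exhausted.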

Compared with self-supervised learning, self-play reinforcement learning lets this system explore a solution space that extends beyond the training examples provided, which can lead to significant improvements. The approach has shown potential for developing strategies that may even surpass human expertise in some cases.